Results v1

In this post, we will cover:

- Recap

- Results of the experiment

- Challenges/Limitations

- Improvements to be made

Recap

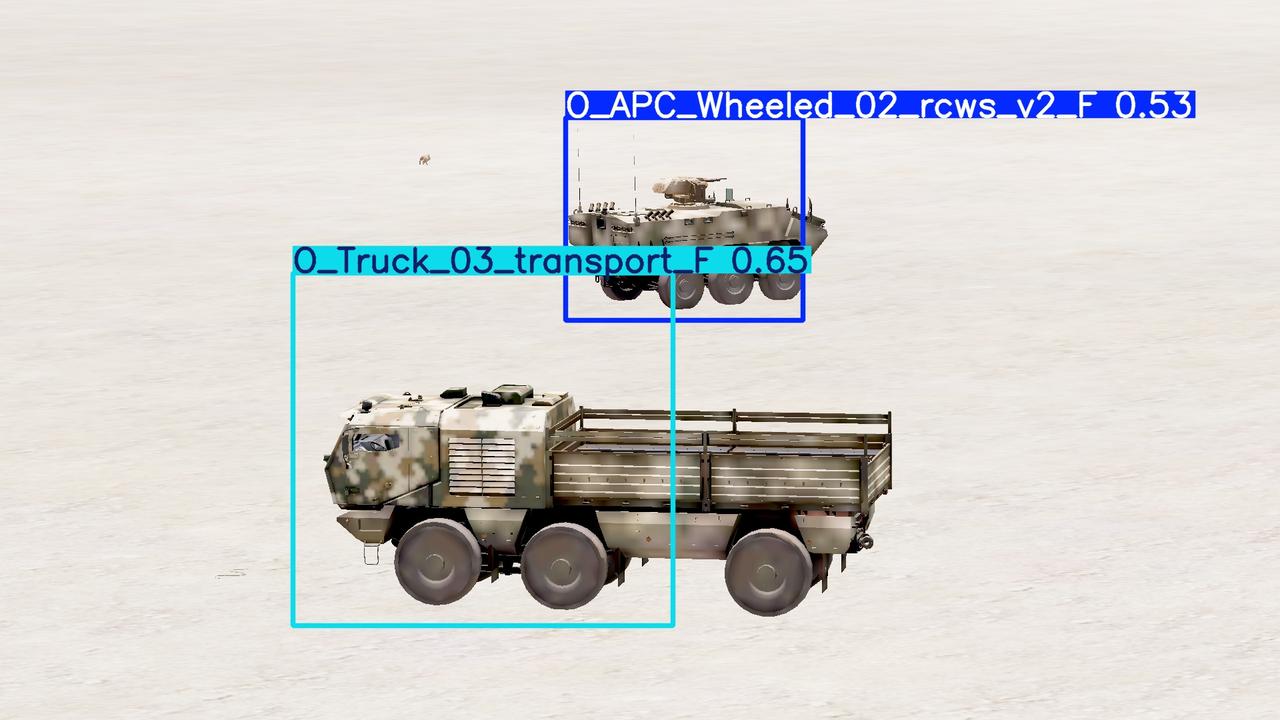

We trained using YOLO v8n, using a dataset of 105 in-game images. We gave it 3 classes of vehicles, MRAP, APC, and Transport Truck.

Images of vehicles were taken in different places, though all were done in bright daylight. Distances of vehicles from camera also varied. Most were taken from a drone, so they were mostly top-down view.

However, do keep in mind that our bounding boxes are still rather inaccurate, with it tending to only “focus” on the front, and ignoring the rear of the vehicle.

Results of the experiment

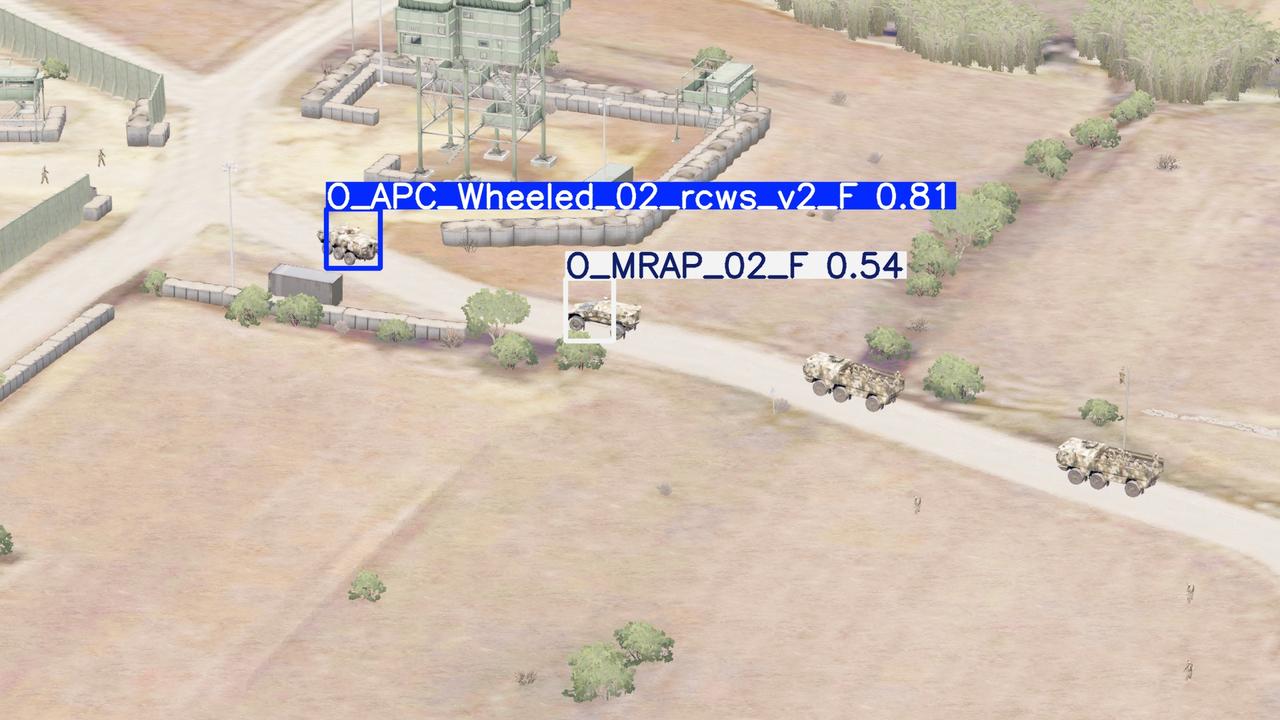

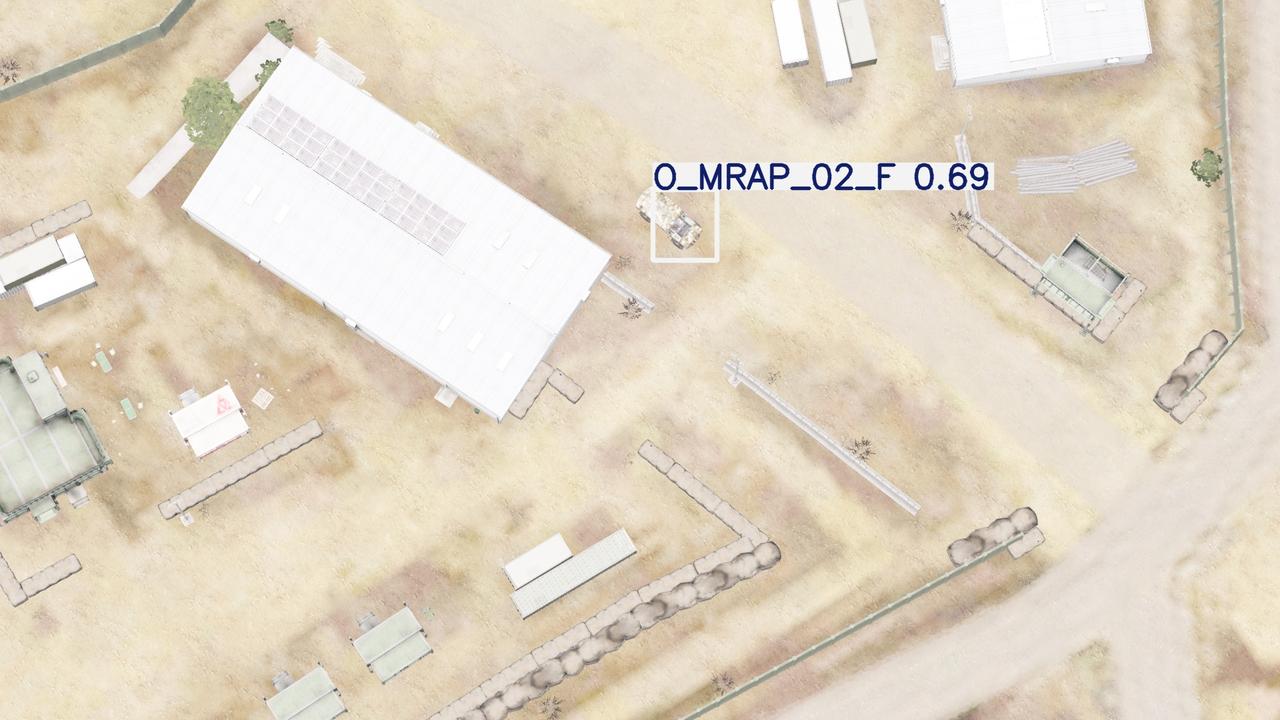

As with all things in life, there are always successes and failures. Unfortunately, this turned out to be more failure than success. Though it could detect vehicles in different scenes, the detection rate was rather spotty. Having said that, let’s view the samples of the successful detections first. Do note that, with the exception of the first image, the images used below are taken in a different scene from the training images.

Now, let us see the failures.

Observe the following image. Note how the cab of the transport truck is blocked by a light pole, and how the model is unable to detect the truck. Confidence threshold was set very low, as evident from the man detected as a transprot truck right beside it.

Next, observe the image below. Note that the front transport truck, with the rear slightly blocked by vegetation, is able to be detected, whereas the transport truck behind it, with the front cab partially blocked by a light pole, is not.

Challenges/Limitations

As evident from the 2 example failures, the model is only able to recognise the front portion of the vehicle. The rear of the vehicle does not matter.

This is most likely due to the very rough bounding boxes given.

Furthermore, the small obstruction disrupting the detection may suggest insufficient training data.

Improvements to be made

Improved bounding boxes

This is the most important improvement that we need to have. As evident from the above experiment, the model is only trained to look for the “front” of the vehicle, rather than the whole vehicle. This means that vehicles with the front partially obstructed will not be detected by the model.

Varying time and view

As this was only a trial, we did not vary the time of day and the viewing position when taking screenshots. To improve this, we can do mulitple batches of screenshots at different time of day, to fully harness the variability that Arma 3 provides.

Furthermore, we will also have to take the images from a different viewpoint, such as at human-height. Using drones was prefered as it was fast and maneuverable. To gather images at human-height, we can make use of the existing light-strike vehicles in Arma 3 to speed around the different vehicle models placed. This will allow us to further vary our training data.