Results v2

In this post, we will cover:

- Recap

- Results of the experiment

- Challenges/Limitations

- Improvements to be made

Recap

We trained a YOLOv8n model on a dataset of 252 in-game images, with the following classes:

    # Classes
    names:
      0: LSV
      1: MRAP
      2: Pickup_Truck
      3: Civvie_Car
      4: Misc

Images of vehicles were taken in different locations, though all in bright daylight. The distance of the vehicles from the camera also varied. Most images were taken from a drone, so they mostly show a top-down view.
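For readers who want to reproduce a setup like this, here is a minimal sketch using the Ultralytics Python package. Only the class names come from this post. The dataset root, the `images/train` and `images/val` folders, the epoch count, the image size and the choice to start from the pretrained `yolov8n.pt` checkpoint are all assumptions for illustration, not our exact configuration.

```python
# Class names copied from the post; every path and hyperparameter below is an assumption.
CLASS_NAMES = ["LSV", "MRAP", "Pickup_Truck", "Civvie_Car", "Misc"]


def build_dataset_yaml(root: str,
                       train_dir: str = "images/train",
                       val_dir: str = "images/val") -> str:
    """Return the text of an Ultralytics-style dataset YAML file."""
    lines = [
        f"path: {root}",
        f"train: {train_dir}",
        f"val: {val_dir}",
        "",
        "# Classes",
        "names:",
    ]
    lines += [f"  {index}: {name}" for index, name in enumerate(CLASS_NAMES)]
    return "\n".join(lines) + "\n"


def train(yaml_path: str, epochs: int = 100, imgsz: int = 640):
    """Train YOLOv8n on the dataset described by yaml_path.

    Requires `pip install ultralytics`. The import is done lazily so that
    build_dataset_yaml() can be used without the package installed.
    """
    from ultralytics import YOLO

    model = YOLO("yolov8n.pt")  # assumed starting point: pretrained nano checkpoint
    return model.train(data=yaml_path, epochs=epochs, imgsz=imgsz)
```

To use it, write the output of `build_dataset_yaml("datasets/arma3_vehicles")` to a file such as `dataset.yaml` (both names are hypothetical) and pass that path to `train()`.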

Results of the experiment

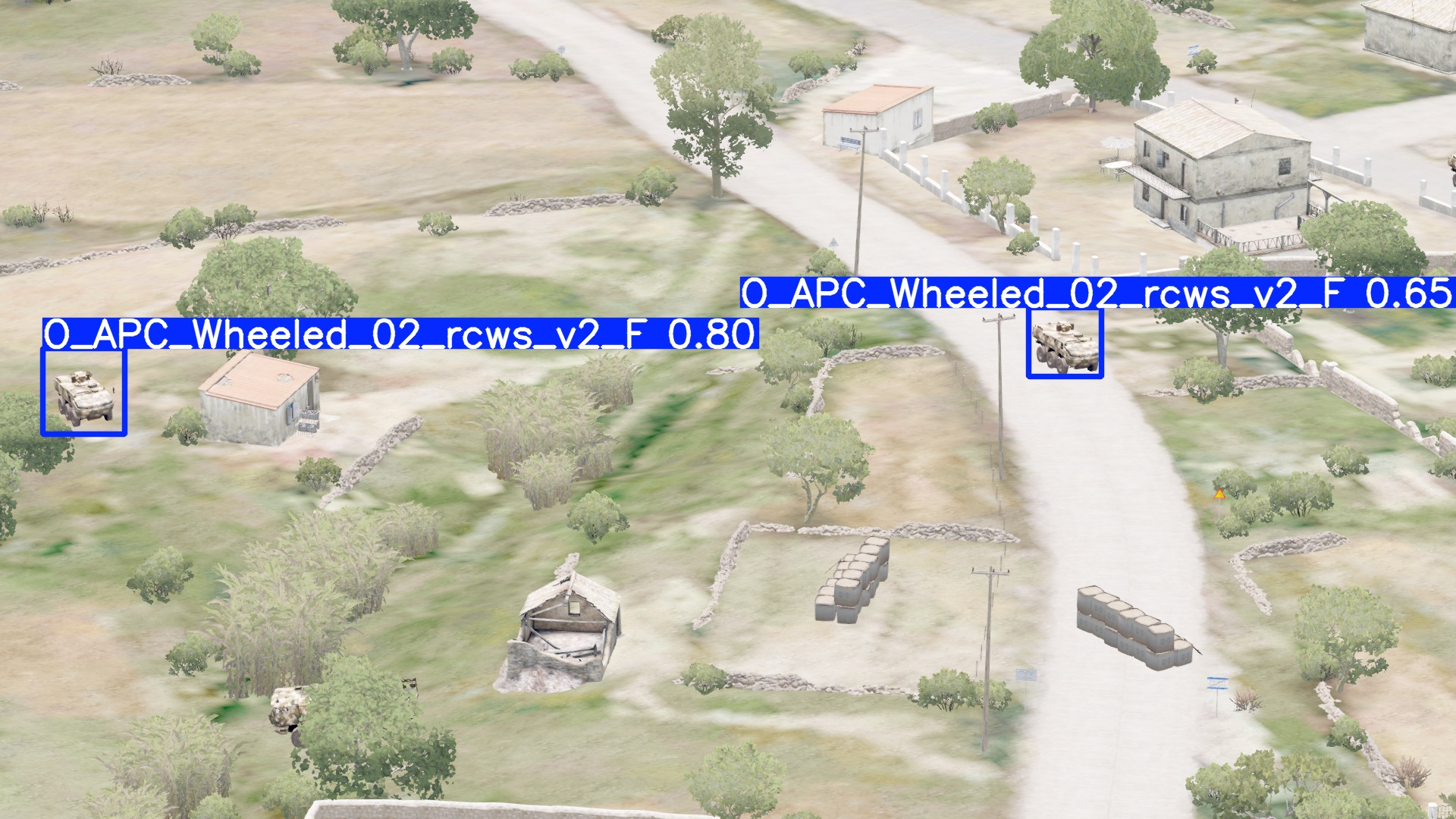

(Separate model: same training data, but a different set of classes.) Testing on an in-game image, we can verify that the model now recognises the whole vehicle. Unfortunately, it does not detect the front cab of a transport truck in the bottom-left corner (the back of the truck is obscured by vegetation).

Recall that one of the main objectives is to run this model on real-life data.

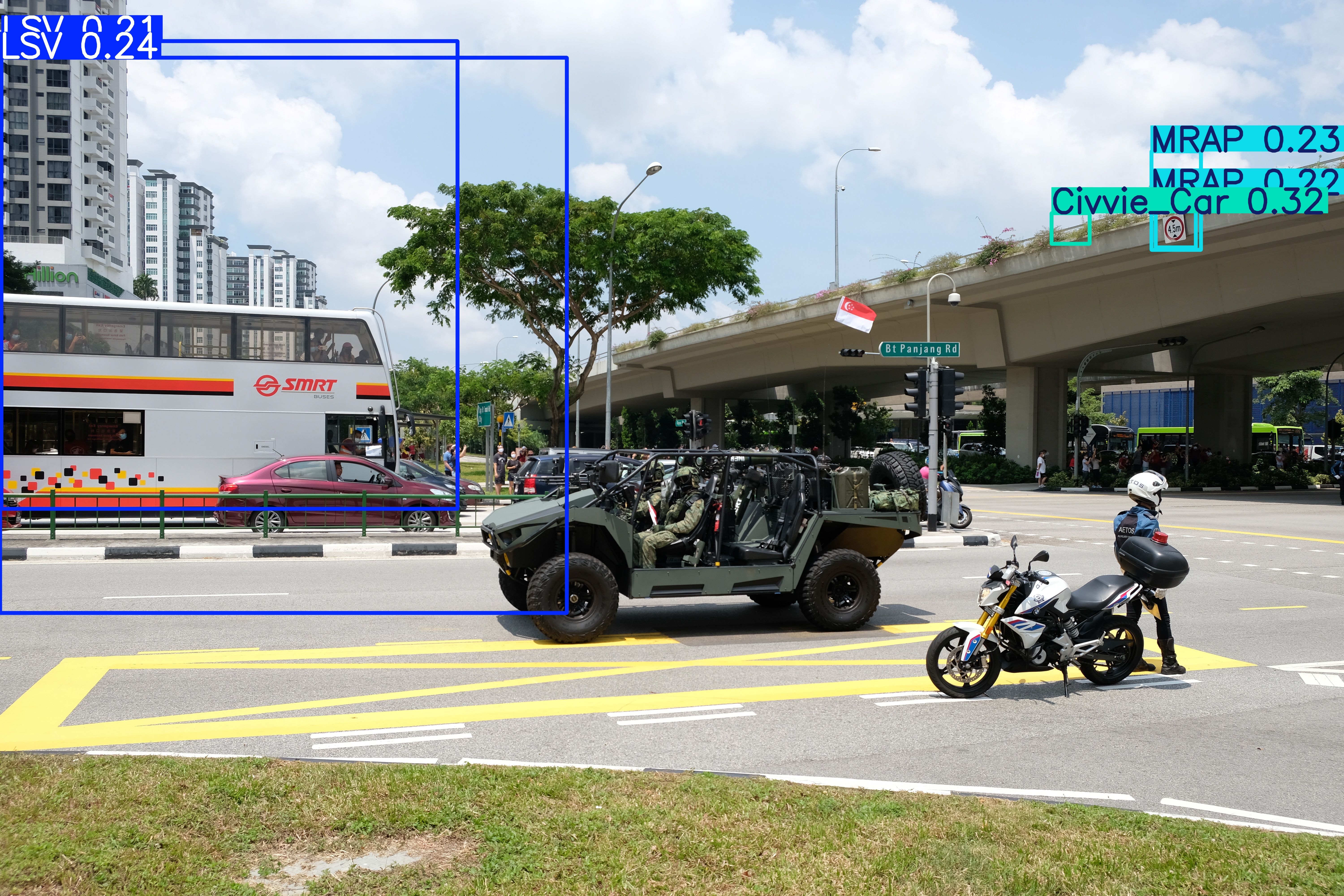

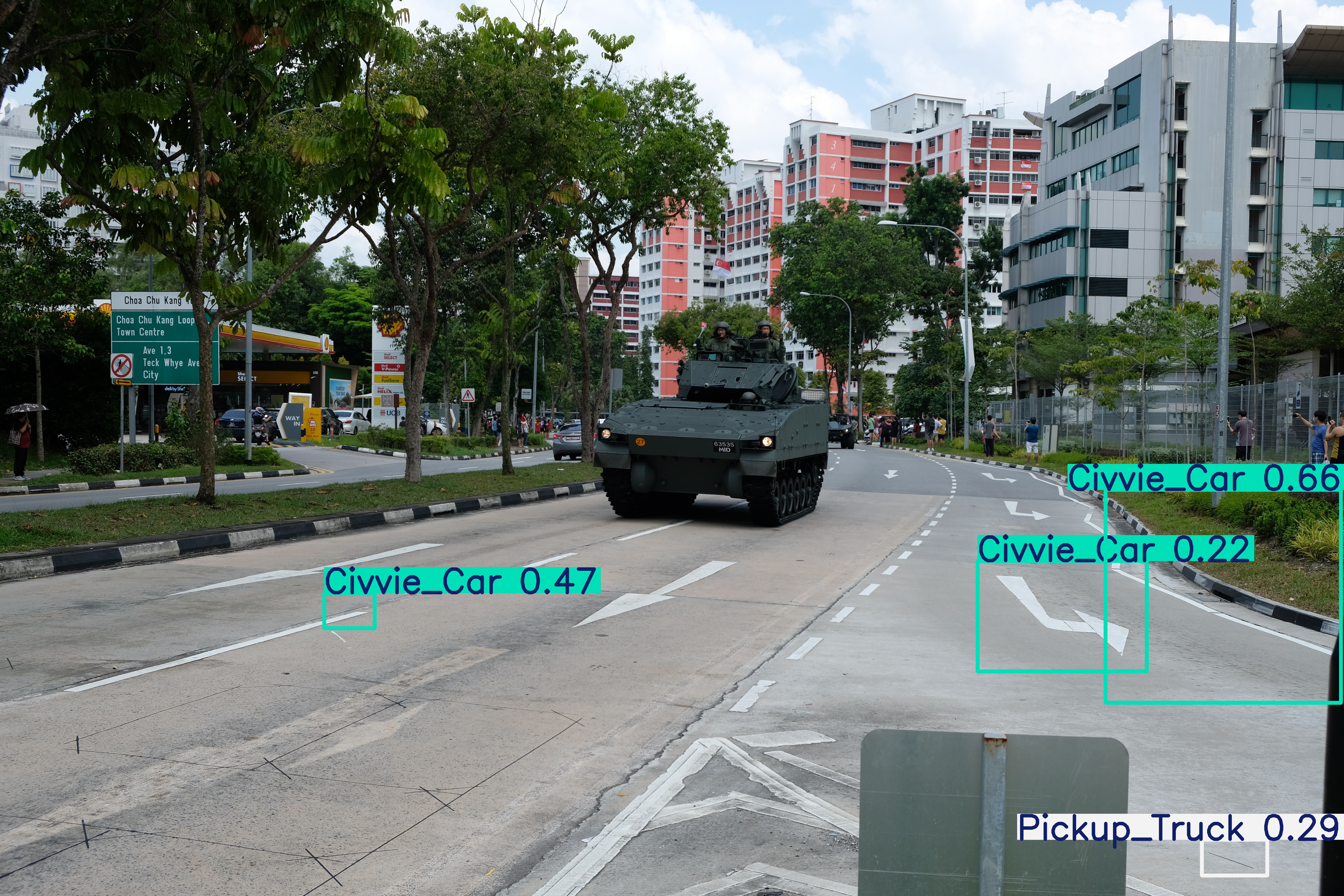

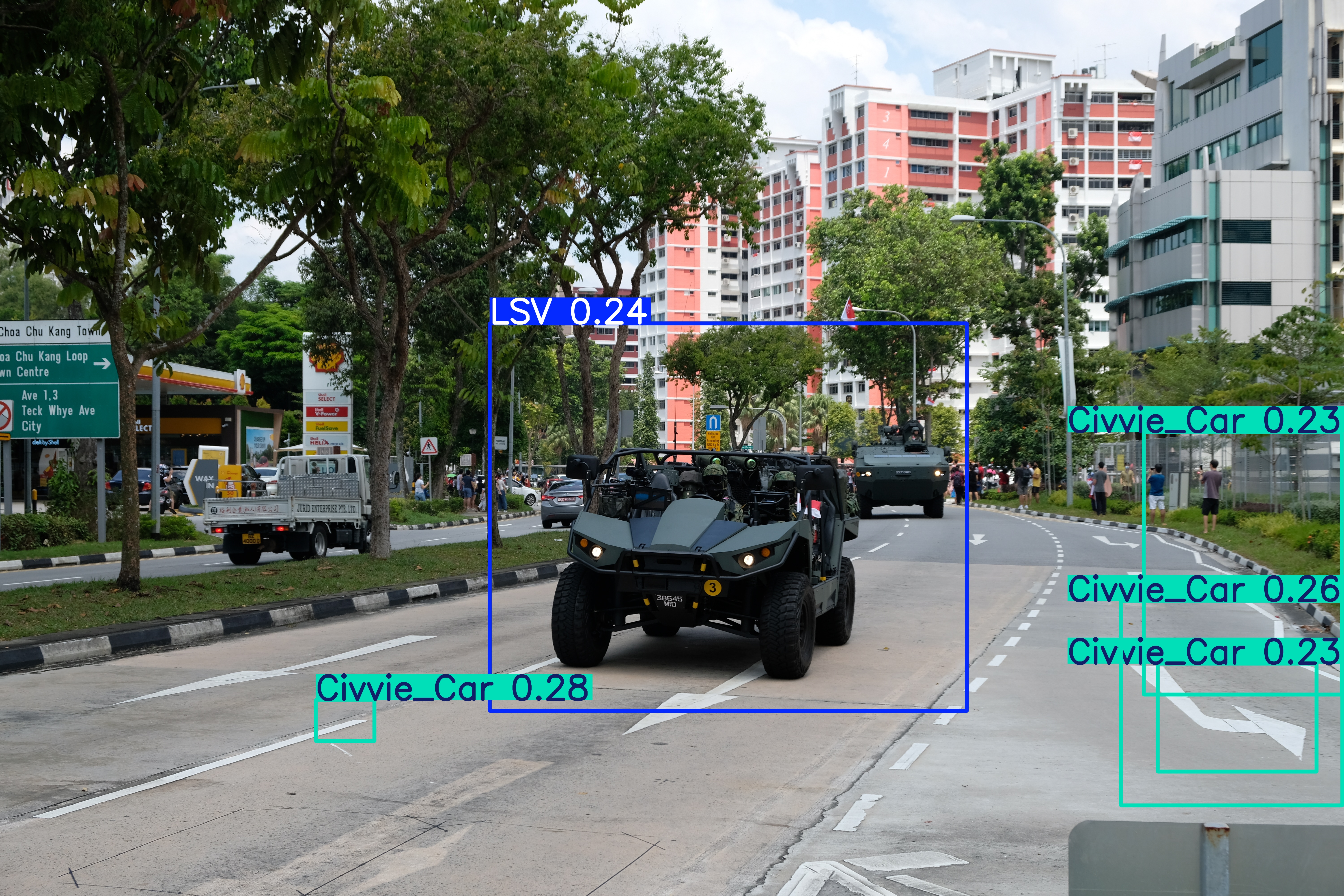

We have a small dataset of images of Singapore’s military assets, mainly consisting of the Light Strike Vehicle Mark 2. Most images were taken at street level, from the front or in side profile.

We expect the model to detect only the LSV, since Arma 3’s Qilin is based on Singapore’s Light Strike Vehicle Mark 2.

Unfortunately, although the model occasionally detects the LSV, it is highly unreliable, and even when it does detect the LSV, the confidence score is low. Furthermore, in multiple instances background objects are mistakenly detected as vehicles.
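One way to put numbers on "unreliable" and "low confidence" is sketched below. It assumes every real-life test image contains an LSV and nothing else of interest, so any detection of another class counts as a likely false positive. It does not check box overlap, so a badly placed LSV box still counts as a hit. The function names, the 0.25 threshold and the `detect()` wrapper are illustrative assumptions, not the evaluation we actually ran.

```python
from collections import Counter
from statistics import mean

Detection = tuple[str, float]  # (class name, confidence)


def summarise(per_image: list[list[Detection]],
              target: str = "LSV",
              conf_threshold: float = 0.25) -> dict:
    """Summarise detections over images that are each assumed to contain `target`."""
    hits = 0
    target_confs = []
    other = Counter()
    for detections in per_image:
        # Ignore anything below the confidence threshold.
        kept = [(name, conf) for name, conf in detections if conf >= conf_threshold]
        confs = [conf for name, conf in kept if name == target]
        if confs:
            hits += 1
            target_confs.append(max(confs))  # best target detection in this image
        other.update(name for name, _ in kept if name != target)
    return {
        "detection_rate": hits / len(per_image) if per_image else 0.0,
        "mean_confidence": mean(target_confs) if target_confs else 0.0,
        "other_class_detections": dict(other),
    }


def detect(weights: str, image_paths: list[str]) -> list[list[Detection]]:
    """Run a trained model on images (requires `pip install ultralytics`)."""
    from ultralytics import YOLO

    model = YOLO(weights)
    per_image = []
    # A very low conf here keeps weak detections; summarise() applies the real threshold.
    for result in model.predict(image_paths, conf=0.01):
        classes = result.boxes.cls.tolist()
        confs = result.boxes.conf.tolist()
        per_image.append([(result.names[int(c)], float(p)) for c, p in zip(classes, confs)])
    return per_image
```

Calling `summarise(detect("best.pt", paths))`, where `best.pt` and `paths` are placeholders for the trained weights and the real-life images, gives a detection rate and mean confidence that can be compared between model versions.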

Challenges/Limitations

- The low confidence scores and low detection rate are undesirable.

- Erroneous detection of background objects as vehicles is also undesirable.

Improvements to be made

Mixing with real-life data

The low confidence scores and erroneous detections may be because the model has not yet been exposed to real-life images. Training data for future models will include a reasonable amount of real-life images. While we are trying to avoid real-life images as far as possible, some will have to be used to augment the training data.
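As a rough sketch of how such a mix could be assembled, the helper below keeps every in-game image and adds just enough real-life images to reach a chosen share of the final training set. The 20% default is a placeholder, since we have not yet decided what a "reasonable amount" is, and the function name and seed are hypothetical.

```python
import random


def mix_training_set(synthetic: list[str],
                     real: list[str],
                     real_fraction: float = 0.2,
                     seed: int = 0) -> list[str]:
    """Return all synthetic images plus enough real ones to reach real_fraction.

    If there are not enough real images, all of them are used and the
    resulting share of real images is lower than requested.
    """
    if not 0.0 <= real_fraction < 1.0:
        raise ValueError("real_fraction must be in [0, 1)")
    # Solve n_real / (n_synthetic + n_real) = real_fraction for n_real.
    wanted = round(real_fraction * len(synthetic) / (1.0 - real_fraction))
    n_real = min(wanted, len(real))
    rng = random.Random(seed)  # fixed seed so the selection is reproducible
    mixed = synthetic + rng.sample(real, n_real)
    rng.shuffle(mixed)
    return mixed
```

With our 252 in-game images, a 20% share works out to 63 real-life images, for 315 training images in total. Any real-life images not selected can be held back for testing, so that the evaluation images are never seen during training.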